🚀 Kafka Unleashed: The Backbone of Real-Time Data Processing! 🔥📊

🚀 Kafka Unleashed: The Backbone of Real-Time Data Processing! 🔥📊

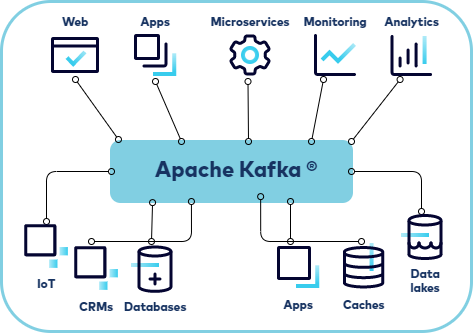

In today’s hyper-connected world, where data is the new oil 🛢️, businesses can’t afford to wait. Enter Apache Kafka — a distributed streaming platform that’s redefining how companies handle real-time data at scale. But what exactly is Kafka? Why is it everywhere — from fintech to social media? Let’s break it down. 💡

🧠 What is Apache Kafka?

Apache Kafka is an open-source distributed event streaming platform used by thousands of companies for high-performance data pipelines, streaming analytics, data integration, and mission-critical applications.

In simpler terms:

👉 Kafka is like a giant, real-time message bus that lets applications publish and subscribe to streams of records in a fault-tolerant, scalable way.

🧐 Why Kafka? What’s the Big Deal?

Before Kafka, systems often relied on point-to-point communication (like REST APIs or database polling) which is:

- ❌ Slow

- ❌ Error-prone

- ❌ Not real-time

Kafka solved this with:

✅ High throughput

✅ Low latency

✅ Horizontal scalability

✅ Durability and fault tolerance

It’s like moving from letters-by-post 📬 to WhatsApp messages 💬 — instant, reliable, and scalable.

🌟 Core Features of Kafka (with Real Use-Cases!)

1. 📦 Publisher-Subscriber (Pub/Sub) Model

- What it does: Producers publish data to Kafka topics, and consumers subscribe to them.

- Use Case: A stock trading app 📈 where real-time market data is published, and different modules (like pricing engine, UI, alert system) consume this data in parallel.

2. 🧱 Durability and Fault Tolerance

- What it does: Kafka stores messages on disk and replicates them across servers.

- Use Case: Banking transactions 💳 — even if a node fails, the transaction logs are safe and can be recovered.

3. 📡 Scalability

- What it does: Kafka can scale horizontally by adding more brokers or partitions.

- Use Case: A food delivery platform 🍕 handling thousands of orders per second during peak time.

4. 🕰️ Real-Time Streaming

- What it does: Kafka enables event stream processing via Kafka Streams or tools like Apache Flink.

- Use Case: Fraud detection 🚨 in credit card payments, where anomalies are detected instantly and blocked.

5. 📚 Message Retention

- What it does: Kafka can store messages for a configured time (from minutes to forever).

- Use Case: Analytics dashboards 🧮 that need to reprocess historical data when queries change.

6. 🔌 Integration Friendly

- What it does: Kafka Connect allows easy integration with databases, storage systems, and stream processors.

- Use Case: Syncing data from MySQL to Elasticsearch in real-time for search functionality 🔍.

🧪 Example: Kafka in Action

Use Case: E-Commerce Order Tracking System 🛍️

Flow:

- 🧑💻 Customer places an order → “Order Placed” event sent to Kafka.

- 🏭 Warehouse Service picks it up → updates inventory.

- 🚚 Logistics Service subscribes → schedules delivery.

- 📱 Notification Service → sends email/SMS to customer.

Each of these services are decoupled and can work independently — thanks to Kafka! 🧩

🏢 How Businesses Can Leverage Kafka

✅ Microservices Communication

Kafka acts as a central nervous system for microservices to exchange data asynchronously and reliably.

✅ Data Lakes and Pipelines

Ingest data from multiple sources (apps, logs, DBs) and stream into data lakes (like S3) or analytics engines in real time.

✅ Customer Behavior Tracking

Track user activity in real-time across platforms to offer personalized recommendations, promotions, and insights. 📊

✅ IoT and Sensor Networks

Kafka can handle millions of sensor updates in real-time for industries like manufacturing, agriculture, and smart cities. 🌆

🛠️ Getting Started with Kafka (Code Example)

Here’s a quick example using Python and Kafka-Python:

🔸 Producer (sending messages):

from kafka import KafkaProducer

producer = KafkaProducer(bootstrap_servers='localhost:9092')

producer.send('order_topic', b'Order ID 12345 placed')

producer.flush()🔹 Consumer (receiving messages):

from kafka import KafkaConsumer

consumer = KafkaConsumer('order_topic', bootstrap_servers='localhost:9092')

for message in consumer:

print(f"Received: {message.value.decode()}")Simple, fast, and powerful! 💥

⚙️ Tools in the Kafka Ecosystem

🔚 Final Thoughts: Kafka is More Than a Queue 🎯

Kafka is not just a messaging queue — it’s a complete event streaming platform. Whether you’re building real-time analytics, high-performance microservices, or event-driven systems, Kafka offers the speed, reliability, and flexibility modern apps need.

💬 “Kafka is to data what the internet is to communication — real-time, scalable, and unstoppable.”

💬 Let’s Connect

If you found this useful, share it with your tech circle! 💼

Want more blogs like this on backend tools, DevOps, or system design?

🔔 Follow me for regular posts that decode complex tech with simplicity!

Comments

Post a Comment